In the sprawling digital landscape of the 21st century, unstructured data—images, videos, audio clips, and more—has become the lifeblood of modern enterprises and creative endeavors alike. This explosion of visual and auditory content is both a goldmine and a formidable challenge. How do we sift through terabytes of pixels and soundwaves to find meaning, to organize, to understand? The answer lies in the rapidly evolving field of automated extraction and tagging, a technological frontier where artificial intelligence is not just an assistant but the very engine of discovery.

The journey begins with the raw, unlabeled data itself. A photograph from a security camera, a drone-captured video of a remote infrastructure project, a decade's worth of medical MRI scans: each is a collection of digital information with no inherent description. For humans, reviewing this data is painstakingly slow and, at scale, highly error-prone. This is where machine learning, particularly deep learning, steps in. These models are trained on large labeled datasets and learn to recognize patterns, objects, scenes, and even apparent emotions. On some narrowly defined tasks they match or exceed human accuracy. They don't just see a picture; they parse it into a structured set of identifiable elements.

At the core of this technology are convolutional neural networks (CNNs), the workhorses of image recognition. A CNN processes an image through successive layers of artificial neurons, each layer detecting increasingly complex features. The early layers typically respond to simple edges and textures. Deeper layers combine these into parts such as a wheel, a window, or an eye, and the final layers assemble those parts into whole objects: a car, a building, a face. On its own, this hierarchy tells the system what is in an image. Object detection architectures build on it with additional components that also predict where each entity is, drawing a bounding box around it and attaching a label and a confidence score. This process, known as object detection, is fundamental to turning a chaotic image into a structured list of annotated items.

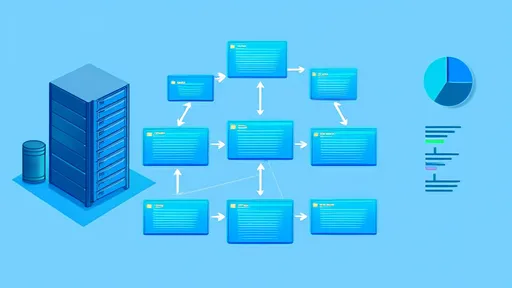
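To make "early layers respond to edges" concrete, here is a minimal sketch of a single convolutional filter in plain Python. It is a teaching illustration only. The kernel is a hand-picked Sobel-style edge detector, whereas a trained CNN learns its kernel weights from data. The names `conv2d`, `relu`, and `VERTICAL_EDGE` are hypothetical helpers, not part of any library.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation of a grayscale image.

    Most deep learning frameworks implement "convolution" this way.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j).
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep only positive responses, as a typical CNN activation does."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hand-picked Sobel-style kernel: responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy 5x6 image: dark left half (0), bright right half (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

edges = relu(conv2d(image, VERTICAL_EDGE))
for row in edges:
    print(row)  # each row prints [0, 36, 36, 0]
```

The feature map lights up only where the window straddles the boundary between dark and bright pixels. A real CNN stacks many such learned filters, interleaved with pooling. Deeper layers then convolve over these feature maps rather than raw pixels, which is how edge responses are combined into parts and objects.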

But the ambition of automation goes beyond mere identification. The next step is context. This is where semantic understanding comes into play. Advanced models are now capable of generating descriptive captions or tags that reflect the relationship between objects. Instead of just tagging "dog" and "frisbee," a sophisticated system can infer the action and generate the tag "dog catching a frisbee." This leap from object detection to scene understanding is powered by models that combine computer vision with natural language processing, creating a narrative from the visual data. This contextual tagging is invaluable for search and retrieval systems, allowing users to find content based on complex queries rather than just a list of keywords.
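The gap between flat keywords and contextual tags can be shown with a small sketch. It assumes each image has already been annotated with object tags and with relation triples of the form (subject, predicate, object). That triple representation is an illustrative choice, not something the article specifies, and in practice the triples would come from a captioning or scene-graph model. `TaggedImage`, `keyword_search`, and `relation_search` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class TaggedImage:
    """Hypothetical index record: flat object tags plus relation triples."""
    name: str
    objects: set[str]
    relations: set[tuple[str, str, str]] = field(default_factory=set)


def keyword_search(index, keywords):
    """Return images whose object tags contain every keyword."""
    wanted = set(keywords)
    return [img.name for img in index if wanted <= img.objects]


def relation_search(index, subject, predicate, obj):
    """Return images containing one specific (subject, predicate, object) triple."""
    return [img.name for img in index if (subject, predicate, obj) in img.relations]


# Hand-written annotations standing in for model output.
index = [
    TaggedImage("park_01.jpg", {"dog", "frisbee", "grass"},
                {("dog", "catching", "frisbee")}),
    TaggedImage("porch_07.jpg", {"dog", "frisbee", "porch"},
                {("dog", "lying next to", "frisbee")}),
]

print(keyword_search(index, ["dog", "frisbee"]))               # ['park_01.jpg', 'porch_07.jpg']
print(relation_search(index, "dog", "catching", "frisbee"))    # ['park_01.jpg']
```

Keyword matching cannot tell the two photos apart. The relational tag can, which is exactly the kind of query ("dog catching a frisbee") that contextual tagging enables.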

The applications of this technology are as diverse as the data it processes. In e-commerce, automated tagging allows retailers to categorize millions of product images quickly, enabling visual search features where a user can upload a picture of a desired item and find similar products for sale. In media and entertainment, broadcasters and studios can automatically index their vast archives of footage. Once an archive has been indexed, a producer searching for "a rainy night scene in a city with yellow taxis" can get results in seconds, a task that would have taken a team of interns weeks to complete manually.
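Visual search is commonly built on embeddings, although the article does not name a method. A trained image encoder maps each picture to a vector, and products are ranked by how close their vectors are to the query's, often using cosine similarity. The sketch below assumes the embeddings already exist. The three-dimensional vectors and SKU names are invented for illustration, since real encoders produce hundreds of dimensions. `cosine_similarity` and `visual_search` are hypothetical helpers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def visual_search(query_vec, catalog, top_k=2):
    """Rank catalog items by cosine similarity to the query embedding."""
    ranked = sorted(
        catalog,
        key=lambda sku: cosine_similarity(query_vec, catalog[sku]),
        reverse=True,
    )
    return ranked[:top_k]


# Made-up embeddings standing in for an image encoder's output.
catalog = {
    "red-sneaker-42": [0.9, 0.1, 0.0],
    "red-boot-17":    [0.7, 0.3, 0.1],
    "blue-lamp-03":   [0.0, 0.2, 0.9],
}
query = [0.85, 0.15, 0.05]  # embedding of the shopper's uploaded photo (invented)

print(visual_search(query, catalog))  # ['red-sneaker-42', 'red-boot-17']
```

This brute-force scan compares the query against every item. At the scale of millions of products, systems typically switch to an approximate nearest-neighbor index, trading a little accuracy for much faster lookups.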

Perhaps one of the most critical applications is content moderation. Social media platforms and online communities are inundated with user-uploaded content every second. Automated systems tirelessly scan images and videos for policy violations, detecting hateful symbols, graphic violence, or explicit material far faster than human moderators could. These systems are not perfect: they miss some violations and flag some harmless content, which is why uncertain cases are often passed to human reviewers. Even so, they form a crucial first line of defense, protecting users and brands from harmful content at a scale no human team could manage.
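One common way to combine automation with human judgment is a two-threshold policy. This is an assumed design, not something the article describes. Very confident detections are actioned automatically, an uncertain middle band goes to a human queue, and everything else is allowed. The category names and threshold values are hypothetical. The per-category scores are assumed to come from some upstream classifier, which is not shown.

```python
from typing import NamedTuple


class Policy(NamedTuple):
    remove_at: float  # score at or above which content is actioned automatically
    review_at: float  # score at or above which content goes to a human queue


# Hypothetical categories and thresholds, for illustration only.
POLICIES = {
    "graphic_violence": Policy(remove_at=0.95, review_at=0.60),
    "explicit":         Policy(remove_at=0.90, review_at=0.50),
    "hate_symbol":      Policy(remove_at=0.90, review_at=0.40),
}


def route(scores):
    """Route one upload given per-category scores in [0, 1].

    Returns ("remove", category), ("review", category) or ("allow", None),
    picking the highest-scoring category when several cross a threshold.
    """
    to_remove = [c for c, s in scores.items() if s >= POLICIES[c].remove_at]
    if to_remove:
        return ("remove", max(to_remove, key=scores.get))
    to_review = [c for c, s in scores.items() if s >= POLICIES[c].review_at]
    if to_review:
        return ("review", max(to_review, key=scores.get))
    return ("allow", None)


print(route({"graphic_violence": 0.97, "explicit": 0.02, "hate_symbol": 0.01}))  # ('remove', 'graphic_violence')
print(route({"graphic_violence": 0.10, "explicit": 0.55, "hate_symbol": 0.05}))  # ('review', 'explicit')
print(route({"graphic_violence": 0.05, "explicit": 0.05, "hate_symbol": 0.05}))  # ('allow', None)
```

Widening the review band catches more borderline cases but increases the human workload. Where to set the thresholds is therefore a policy decision as much as a technical one.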

In scientific and industrial fields, the impact is equally profound. Geologists and environmental scientists use automated analysis of satellite and drone imagery to monitor erosion, track deforestation, or identify potential mineral deposits. Radiologists employ AI-powered tools to highlight potential anomalies in medical scans, not to replace the doctor's expertise but to augment it, helping ensure that subtle signs of disease are not overlooked in a busy clinic. In these contexts, automated extraction and tagging is a powerful force multiplier for human expertise.
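Deforestation tracking is one application where the underlying computation can be very simple. A widely used vegetation measure, swapped in here as an illustration because the article does not name a technique, is the Normalized Difference Vegetation Index: NDVI = (NIR - Red) / (NIR + Red). Here NIR and Red are a pixel's near-infrared and red reflectance. Healthy vegetation reflects strongly in near-infrared, so its NDVI is high. The sketch compares two dates and reports the share of previously vegetated pixels that were lost. The 0.5 threshold and the reflectance values are assumptions chosen for the example.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel, in [-1, 1]."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def vegetation_loss(before, after, veg_threshold=0.5):
    """Fraction of pixels vegetated in `before` that are no longer vegetated in `after`.

    `before` and `after` are same-sized grids of (nir, red) reflectance pairs.
    `veg_threshold` is an assumed cut-off separating vegetation from bare ground.
    """
    was_vegetated = lost = 0
    for row_b, row_a in zip(before, after):
        for (nir_b, red_b), (nir_a, red_a) in zip(row_b, row_a):
            if ndvi(nir_b, red_b) >= veg_threshold:
                was_vegetated += 1
                if ndvi(nir_a, red_a) < veg_threshold:
                    lost += 1
    return lost / was_vegetated if was_vegetated else 0.0


# Invented reflectance values for two land-cover types.
FOREST = (0.50, 0.05)   # strong near-infrared, little red: NDVI about 0.82
CLEARED = (0.25, 0.20)  # bare soil: NDVI about 0.11

before = [[FOREST, FOREST], [FOREST, FOREST]]
after = [[FOREST, CLEARED], [FOREST, CLEARED]]
print(vegetation_loss(before, after))  # 0.5
```

Real pipelines add steps this sketch skips, such as cloud masking, co-registration of the two images, and seasonal baselines, so that a harvest or a cloud shadow is not mistaken for clearing.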

However, this technological march is not without its ethical quandaries and technical hurdles. The performance of these AI models depends heavily on the data they are trained on, and biased or unrepresentative training data tends to produce biased models. There are well-documented cases of facial recognition systems performing markedly worse on women and people of color, a disparity attributed in large part to training data dominated by images of white men. This raises serious concerns about fairness and discrimination when such systems are deployed in policing, hiring, or security. Ensuring algorithmic fairness is not a secondary feature but a primary engineering and ethical imperative.
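A first practical step toward catching such disparities is disaggregated evaluation: reporting error rates separately for each group instead of a single overall accuracy. The sketch uses an invented evaluation log with placeholder group names. It shows how a respectable-looking overall error rate can hide a large gap between groups. `per_group_error_rates` is a hypothetical helper.

```python
from collections import defaultdict


def per_group_error_rates(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


# Invented evaluation log: 100 test images per group.
records = (
    [("group_a", "match", "match")] * 95 + [("group_a", "no_match", "match")] * 5
    + [("group_b", "match", "match")] * 70 + [("group_b", "no_match", "match")] * 30
)

rates = per_group_error_rates(records)
overall = sum(p != t for _, p, t in records) / len(records)

print(f"overall error: {overall:.2%}")
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.2%}")
print(f"worst-to-best gap: {max(rates.values()) - min(rates.values()):.2%}")
```

The overall figure blends the two groups together. Only the per-group breakdown reveals that one group sees six times as many errors as the other.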

Furthermore, the "black box" nature of some complex deep learning models presents a challenge. Sometimes, even the engineers who build them cannot fully explain why a model arrived at a specific conclusion or tag. This lack of interpretability can be a significant barrier in high-stakes fields like medicine or criminal justice, where understanding the "why" behind a decision is as important as the decision itself. The field of Explainable AI (XAI) is emerging as a critical area of research to make these automated processes more transparent and trustworthy.
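Explainable AI covers many techniques. One of the simplest, used here purely as an illustration, is occlusion sensitivity. You blank out one region of the input at a time and measure how much the model's score drops, and regions whose removal hurts the score most are the ones the model relied on. The sketch treats the model as a black-box function. The `toy_model` below is a hypothetical stand-in that weights the upper-right corner heavily, not a trained network.

```python
def occlusion_map(image, model, patch=2, baseline=0.0):
    """Score drop when each patch x patch block is replaced by `baseline`.

    Larger values mean the model relied more on that region.
    """
    base_score = model(image)
    h, w = len(image), len(image[0])
    heat = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            occluded = [r[:] for r in image]  # copy so the original is untouched
            for i in range(top, min(top + patch, h)):
                for j in range(left, min(left + patch, w)):
                    occluded[i][j] = baseline
            row.append(base_score - model(occluded))
        heat.append(row)
    return heat


# Stand-in "model": its score depends mostly on the upper-right corner.
WEIGHTS = [[0.0, 0.0, 1.0, 1.0],
           [0.0, 0.0, 1.0, 1.0],
           [0.1, 0.1, 0.0, 0.0],
           [0.1, 0.1, 0.0, 0.0]]


def toy_model(img):
    return sum(w * p for w_row, p_row in zip(WEIGHTS, img) for w, p in zip(w_row, p_row))


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(image, toy_model)
for row in heat:
    print([round(v, 2) for v in row])
```

The upper-right cell of the resulting 2x2 map shows by far the largest drop, correctly pointing at the region the stand-in model depends on. Because the method only needs to call the model, it works with any classifier. The price is one extra forward pass per patch.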

Looking ahead, automated extraction and tagging is moving toward greater integration and sophistication. The field is shifting from systems that describe what is in the data toward systems that attempt to interpret what it means. Predictive tagging could anticipate trends, surface emerging patterns, and provide proactive insights. Multimodal AI, meaning systems that process video, audio, and text together, promises a more holistic understanding of content. A video clip of a political speech could be automatically tagged not just with the speaker's name, but with the sentiment of their delivery, the key topics mentioned in the transcript, and the reaction of the audience.
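The article does not say how the modalities would be combined. One simple strategy, assumed here for illustration, is late fusion. Each modality-specific model tags the clip independently, and the results are merged afterwards. Alternatives train a single model on all modalities jointly. The sketch merges tags by label, keeps the highest confidence, and records which modalities agreed. The labels, confidences, and the placeholder speaker name are all invented, and the 0.5 cut-off is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tag:
    label: str
    confidence: float
    source: str  # modality that produced the tag: "video", "audio", or "transcript"


def fuse(tags, min_confidence=0.5):
    """Late fusion: merge per-modality tags into one entry per label.

    Each label keeps its highest confidence and the sorted list of
    modalities that produced it; tags below `min_confidence` are dropped.
    """
    merged = {}
    for tag in tags:
        if tag.confidence < min_confidence:
            continue
        best, sources = merged.get(tag.label, (0.0, set()))
        merged[tag.label] = (max(best, tag.confidence), sources | {tag.source})
    return {label: (conf, sorted(srcs)) for label, (conf, srcs) in merged.items()}


# Invented per-modality output for one clip of a speech.
clip_tags = [
    Tag("speaker:jane_doe", 0.92, "video"),       # e.g. from face recognition
    Tag("speaker:jane_doe", 0.81, "audio"),       # e.g. from voice matching
    Tag("topic:healthcare", 0.88, "transcript"),
    Tag("delivery:confident", 0.64, "audio"),
    Tag("audience:applause", 0.77, "audio"),
    Tag("topic:weather", 0.20, "transcript"),     # below the cut-off, dropped
]

for label, (conf, sources) in sorted(fuse(clip_tags).items()):
    print(f"{label}: {conf:.2f} from {', '.join(sources)}")
```

Taking the maximum confidence is the crudest possible merge rule. A production system would more likely calibrate each model's scores first, or learn how much to trust each modality. Recording which sources agreed is still useful on its own, since a speaker identified by both face and voice is better supported than one identified by either alone.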

In conclusion, the automated extraction and tagging of unstructured data is far more than a technical convenience; it is a fundamental shift in our relationship with information. It is the lens that brings the blur of big data into focus, transforming overwhelming noise into actionable intelligence. From empowering creativity to safeguarding communities and accelerating discovery, this technology is quietly building the indexed, searchable, and understandable digital world of tomorrow. The challenge for us is to steer its development with a careful hand, ensuring that the systems we build to see and understand our world are as fair, transparent, and beneficial as the future we hope to create with them.

By /Aug 26, 2025
