The landscape of cybersecurity insurance is undergoing a profound transformation, driven by escalating digital threats and a rapidly evolving regulatory environment. Insurers are no longer passive risk-takers; they have become active participants in shaping the cybersecurity posture of their clients. The technical requirements to even qualify for a policy have become stringent, moving beyond simple checkbox questionnaires to deep, evidence-based assessments of an organization's digital defenses. This shift represents a fundamental change in how businesses must approach their security infrastructure, not as a cost center but as a core component of their financial and operational resilience.

At the heart of these new technical prerequisites lies a demand for demonstrable security maturity. Insurers now routinely require multi-factor authentication (MFA) to be enforced across all accessible systems, particularly for remote access and privileged accounts. The absence of MFA is frequently a deal-breaker, instantly disqualifying an organization or leading to prohibitively high premiums. Similarly, robust endpoint detection and response (EDR) solutions are no longer a luxury but a baseline expectation. Insurers want proof that a company can not only detect a breach in progress but also respond to it effectively to minimize damage.
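To make the idea of evidence-based MFA assessment concrete, here is a minimal sketch of how an organization might check its own account inventory before an insurer does. The `Account` record, its fields, and the `mfa_gaps` helper are all hypothetical names chosen for illustration. A real inventory would come from an identity provider export, and each insurer defines its own scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    """Hypothetical inventory record; field names are assumptions."""
    username: str
    privileged: bool
    remote_access: bool
    mfa_enabled: bool


def mfa_gaps(accounts: list[Account]) -> list[str]:
    """Return usernames of privileged or remote-access accounts without MFA."""
    return [
        account.username
        for account in accounts
        if (account.privileged or account.remote_access)
        and not account.mfa_enabled
    ]


# Illustrative data only, not drawn from any real environment.
inventory = [
    Account("alice", privileged=True, remote_access=True, mfa_enabled=True),
    Account("svc-backup", privileged=True, remote_access=False, mfa_enabled=False),
    Account("bob", privileged=False, remote_access=False, mfa_enabled=False),
]

print(mfa_gaps(inventory))  # ['svc-backup']
```

An empty result would be the kind of evidence an underwriter is likely to look for. Any name in the list is a gap to close before applying.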

Beyond specific tools, the entire architecture of data protection is scrutinized. Insurers demand comprehensive and encrypted backups that are stored immutably and tested regularly for integrity. The ability to restore operations quickly after a ransomware attack is a critical factor in risk assessment. Furthermore, the implementation of zero-trust network architectures is increasingly favored. This model, which assumes no user or device is trustworthy by default, aligns perfectly with an insurer's goal of minimizing the attack surface and preventing lateral movement by threat actors.
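Regular integrity testing of backups can be partly automated. The sketch below assumes that a manifest of SHA-256 digests was recorded when each backup was written. The manifest format and the function names `sha256_of` and `verify_backups` are illustrative assumptions, not a standard any insurer prescribes. A matching checksum shows only that a file is unchanged, so it complements a full restore test rather than replacing it.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backups(manifest: dict[str, str], backup_dir: Path) -> dict[str, str]:
    """Compare each file against its recorded digest.

    `manifest` maps a file name to the hex digest recorded at backup time
    (an assumed format). Each file is reported as 'ok', 'missing', or 'corrupt'.
    """
    results: dict[str, str] = {}
    for name, expected in manifest.items():
        target = backup_dir / name
        if not target.is_file():
            results[name] = "missing"
        elif sha256_of(target) != expected:
            results[name] = "corrupt"
        else:
            results[name] = "ok"
    return results
```

Running a check like this on a schedule and keeping the dated results is one way to produce the proof of regular testing that insurers ask for.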

The challenge for many organizations, however, is that these technical standards are a moving target. What was considered best practice last year may be inadequate today. The relentless innovation of cybercriminals forces a continuous cycle of adaptation and investment. For small and medium-sized enterprises (SMEs) with limited IT budgets, this creates a significant barrier to entry. They are caught in a catch-22: they need insurance to protect against a potentially catastrophic event, but they cannot afford the sophisticated security stack required to obtain that insurance, creating a dangerous protection gap.

Parallel to the technical hurdles is the burgeoning field of compliance, which presents its own labyrinthine challenges. The regulatory landscape is a fragmented patchwork of international, national, and industry-specific mandates. A multinational corporation must navigate the European Union's General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), the Cybersecurity Law of China, and a host of other frameworks, each with its own nuances and reporting obligations. A compliance failure in one jurisdiction can invalidate an insurance claim, even if the company was fully compliant elsewhere.

This complexity is compounded by the fact that insurance policies themselves are becoming more specific about compliance requirements. Clauses mandating adherence to certain frameworks like the NIST Cybersecurity Framework or ISO 27001 are common. Following a claim, insurers will conduct a forensic audit to determine if the organization was in breach of any stated compliance obligation at the time of the incident. This post-claim scrutiny turns the insurance policy into a continuous compliance agreement, where any deviation can be grounds for denying coverage.

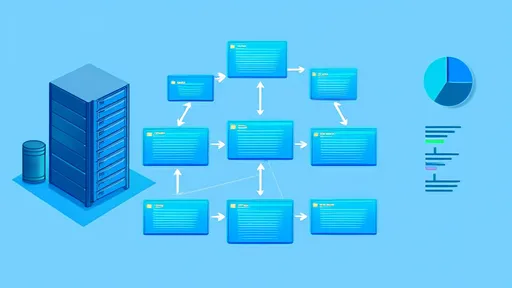

The rise of supply chain risk further muddies the waters. An organization's security is only as strong as its weakest vendor. Insurers are now deeply interested in the cybersecurity practices of third-party suppliers, partners, and software providers. Companies are being asked to vouch for the security of their entire digital ecosystem, a nearly impossible task that extends liability far beyond their direct control. This has led to the inclusion of stringent vendor management clauses in policies, requiring insured entities to conduct regular security assessments of their partners.

Perhaps the most daunting compliance challenge stems from the evolving legal interpretations of "reasonable security." This term, often cited in regulations and insurance policies, lacks a universal definition. Courts and regulators are increasingly defining it by the standards of the day, which are in constant flux. A security practice deemed reasonable when a policy was signed might be considered negligent a year later after a high-profile breach reveals new vulnerabilities. This legal uncertainty makes it difficult for organizations to have full confidence in their coverage, knowing that the goalposts can be moved after the fact.

The intersection of these technical and compliance demands is creating a new paradigm for risk management. The traditional model of buying insurance to transfer risk is being replaced by a collaborative model where insurer and insured partner to mitigate risk. Insurers are offering services like pre-binding security assessments, continuous monitoring tools at a discounted rate, and access to cybersecurity expertise. In return, they expect transparency and a commitment to meeting ever-higher security benchmarks.

In conclusion, obtaining and maintaining cybersecurity insurance is no longer a simple financial transaction. It is an ongoing strategic initiative that requires deep technical investment and rigorous compliance diligence. Organizations must view their insurance provider not just as a payer of claims but as a stakeholder in their cybersecurity program. The future will likely see even greater integration, with insurance premiums dynamically adjusted based on real-time security telemetry. For businesses, the message is clear: robust, provable, and adaptable cybersecurity is no longer optional—it is the price of admission for financial protection in the digital age.

By /Aug 26, 2025
