The landscape of data processing has been transformed by the emergence of the real-time data lake, a paradigm that merges the historical depth of data warehousing with the immediacy of stream processing. This evolution is more than a technical shift; it is a fundamental rethinking of how organizations harness data for competitive advantage. At the heart of this change lies unified batch and stream processing, often termed stream-batch integration, which is rapidly becoming a cornerstone of modern data architecture.

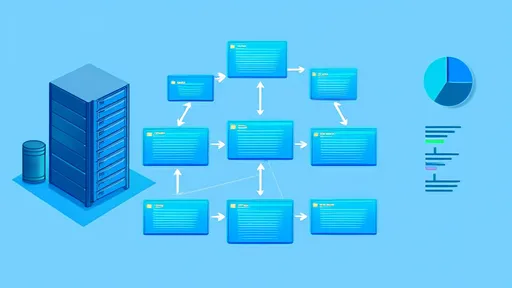

Traditional data infrastructures often operated in silos, with batch processing systems handling large volumes of historical data and stream processing engines managing real-time events. This dichotomy created significant operational overhead, data consistency challenges, and latency in deriving insights. The real-time data lake shatters these barriers by providing a single, cohesive platform where data from both batch and streaming sources can be ingested, stored, and processed seamlessly. This unification not only simplifies architecture but also enables organizations to perform complex analytics on fresh data without sacrificing the ability to query vast historical datasets.

One of the most compelling aspects of stream-batch integration within a real-time data lake is its ability to support lambda-free architectures. The lambda architecture, which maintained separate batch and speed layers, was once a popular solution for handling both historical and real-time data. However, it required teams to maintain two different codebases and to merge their results. With advances in processing engines such as Apache Flink and Apache Spark Structured Streaming, together with open table formats such as Apache Iceberg and Delta Lake, it is now possible to achieve low-latency processing and batch-like reliability within a single pipeline. This eliminates the need for dual systems, reducing both cost and operational friction.
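The idea of a single pipeline can be illustrated with a minimal sketch in plain Python. It is not how Flink or Spark is implemented; it only shows one piece of transformation logic serving both a bounded (batch) input and an unbounded-style (streaming) input. The event shape and the names `running_totals`, `batch_totals`, and `live_feed` are hypothetical and chosen for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event shape for this sketch: (category, amount).
Event = Tuple[str, float]


def running_totals(events: Iterable[Event]) -> Iterator[Dict[str, float]]:
    """Yield a copy of the per-category totals after each event.

    The function only iterates, so it accepts a finished list (batch)
    or a generator that produces events over time (stream).
    """
    totals: Dict[str, float] = defaultdict(float)
    for category, amount in events:
        totals[category] += amount
        yield dict(totals)


def batch_totals(events: Iterable[Event]) -> Dict[str, float]:
    """Batch view: run the same logic and keep only the final state."""
    final: Dict[str, float] = {}
    for final in running_totals(events):
        pass
    return final


# Bounded input: historical records that are already stored.
historical = [("books", 10.0), ("toys", 5.0), ("books", 2.5)]


def live_feed() -> Iterator[Event]:
    """Stand-in for an unbounded source such as a message queue."""
    yield ("toys", 1.0)
    yield ("books", 4.0)


if __name__ == "__main__":
    print("batch result:", batch_totals(historical))
    for snapshot in running_totals(live_feed()):
        print("stream update:", snapshot)
```

Because the batch path is just the streaming path run to completion, there is one codebase to test and no result-merging step, which is the property the lambda architecture lacked.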

The technological underpinnings of this integration are equally fascinating. Modern real-time data lakes store data in columnar file formats such as Parquet and ORC, which are optimized for analytical reads through compression and column pruning. Open table formats such as Apache Iceberg, Apache Hudi, and Delta Lake add a metadata layer on top of these files and bring transactional consistency to data lakes, a feature once exclusive to traditional databases. This means that organizations can now perform ACID transactions on massive datasets, ensuring data integrity while supporting concurrent reads and writes. The result is a system that not only processes data in real time but also maintains the reliability and robustness expected of batch systems.
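How a table format can offer transactional behavior over plain files is easier to see in a toy model. The sketch below assumes a simplified design in which every commit creates a new immutable snapshot (a list of data files) and writers use optimistic concurrency. `ToyTable` and `CommitConflict` are hypothetical names; this is not the API of Iceberg, Hudi, or Delta Lake, whose real metadata layouts are considerably richer.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple


class CommitConflict(Exception):
    """Raised when a writer's base version is no longer the latest."""


@dataclass
class ToyTable:
    # Each snapshot is an immutable tuple of data file names.
    # Version 0 is the empty table.
    snapshots: List[Tuple[str, ...]] = field(default_factory=lambda: [()])

    @property
    def version(self) -> int:
        return len(self.snapshots) - 1

    def read(self, version: Optional[int] = None) -> Tuple[str, ...]:
        """Return the files visible at a version (latest by default).

        Readers pinned to an older version never see later commits.
        """
        pinned = self.version if version is None else version
        return self.snapshots[pinned]

    def commit(self, base_version: int, new_files: Sequence[str]) -> int:
        """Atomically publish new files, or fail if the table moved on."""
        if base_version != self.version:
            raise CommitConflict(
                f"based on v{base_version}, table is at v{self.version}"
            )
        self.snapshots.append(self.snapshots[-1] + tuple(new_files))
        return self.version


if __name__ == "__main__":
    table = ToyTable()
    start = table.version                        # both writers start here
    table.commit(start, ["part-0001.parquet"])   # streaming writer wins
    try:
        table.commit(start, ["part-0002.parquet"])  # stale batch writer
    except CommitConflict as exc:
        print("retry needed:", exc)
    print("reader pinned to v0 sees:", table.read(0))
    print("latest reader sees:", table.read())
```

In this model a losing writer would re-read the latest version and retry, while readers keep a consistent view of whichever snapshot they started from.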

From an operational standpoint, the benefits are substantial. Companies can now build applications that require real-time responsiveness without compromising on the ability to analyze historical trends. For instance, an e-commerce platform can use the same data lake to power real-time recommendation engines while simultaneously running batch jobs for quarterly sales analysis. This duality reduces infrastructure sprawl and allows data teams to focus on extracting value rather than managing complexity. Moreover, with the adoption of cloud-native technologies, these data lakes can scale elastically, handling petabytes of data without significant upfront investment.

However, the journey to implementing a successful real-time data lake is not without challenges. Organizations must navigate issues such as data quality, schema evolution, and governance in a dynamic environment. Ensuring that streaming data is as trustworthy as batch data requires robust data validation frameworks and metadata management. Additionally, teams need to upskill to work with new tools and paradigms, moving away from traditional ETL processes to more agile data engineering practices.
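As a rough illustration of what validation and schema evolution might involve, the following sketch checks incoming records against an expected schema and accepts only additive schema changes. The schema representation (field name mapped to a Python type) and the functions `validate` and `evolve` are assumptions made for this example, not part of any specific framework.

```python
from typing import Any, Dict, List

# Hypothetical schema representation: field name -> expected Python type.
Schema = Dict[str, type]
Record = Dict[str, Any]


def validate(record: Record, schema: Schema) -> List[str]:
    """Return a list of problems; an empty list means the record passes."""
    errors: List[str] = []
    for name, expected in schema.items():
        value = record.get(name)
        if value is None:
            errors.append(f"missing field: {name}")
        elif not isinstance(value, expected):
            errors.append(
                f"bad type for {name}: expected {expected.__name__}, "
                f"got {type(value).__name__}"
            )
    return errors


def evolve(schema: Schema, record: Record) -> Schema:
    """Additive evolution only: adopt unseen fields, never drop or retype."""
    evolved = dict(schema)
    for name, value in record.items():
        if name not in evolved and value is not None:
            evolved[name] = type(value)
    return evolved


if __name__ == "__main__":
    schema: Schema = {"order_id": str, "amount": float}
    good = {"order_id": "A-1", "amount": 19.99, "channel": "mobile"}
    bad = {"order_id": "A-2", "amount": "19.99"}

    print(validate(good, schema))   # no problems reported
    print(validate(bad, schema))    # reports the wrong type for amount
    print(sorted(evolve(schema, good)))
```

Applying the same checks to streaming and batch ingestion is one way to keep both paths equally trustworthy; rejected records would typically be routed to a quarantine location rather than silently dropped.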

Despite these hurdles, the trend toward stream-batch integration is accelerating. Industry leaders in sectors like finance, retail, and telecommunications are already reaping the rewards of this approach. Fraud detection systems that once relied on overnight batch processing can now identify anomalies in milliseconds. Supply chain analytics can react to disruptions in real time, while customer experience platforms can personalize interactions based on the latest data. The real-time data lake, with its unified processing capabilities, is enabling a new era of data-driven decision-making.

Looking ahead, the convergence of batch and streaming will continue to evolve. Innovations in machine learning integration, edge computing, and serverless architectures are poised to further enhance the capabilities of real-time data lakes. As these technologies mature, we can expect even tighter integration between operational databases and analytical systems, blurring the lines between transactional and analytical workloads. The future of data processing is not just about speed or scale—it is about unification, simplicity, and agility.

In conclusion, the real-time data lake with stream-batch integration is more than a technological advancement; it is a strategic priority for organizations aiming to thrive in a data-centric world. By breaking down the barriers between batch and streaming, it lets businesses act on insights as they emerge, turning data into a true asset. As adoption grows and tools mature, this architecture is well positioned to become a new standard, reshaping how we think about and interact with data.

By /Aug 26, 2025
