The landscape of data management is shifting from the rigid confines of traditional relational systems toward the high-dimensional realm of vector databases. As enterprises increasingly deploy AI and machine learning models in production, demand has surged for specialized databases that can query data by its meaning rather than by exact matches. That surge has ignited fierce competition among vendors, making performance benchmarking more critical, and more complex, than ever before. The old yardsticks of transactions per second and query latency are no longer sufficient on their own; a more nuanced set of dimensions is required to gauge the capabilities of a modern vector database.

Historically, database performance was a relatively straightforward affair. Analysts would measure throughput, latency under load, and perhaps scalability, often using standardized benchmarks like TPC-C. These metrics worked well for transactional systems where operations were discrete and predictable. However, the fundamental operation of a vector database—approximate nearest neighbor (ANN) search—is a probabilistic and computationally intensive task that varies dramatically based on the data itself. A benchmark that fails to account for the intrinsic properties of vectors, the chosen indexing algorithm, or the recall rate is, at best, incomplete and, at worst, entirely misleading. The industry is now recognizing that evaluating these systems demands a bespoke framework built from the ground up.

The Heart of the Matter: Accuracy Versus Speed

At the core of any vector database benchmark is the trade-off between accuracy and speed. In vector search, accuracy is quantified by recall (the fraction of the true nearest neighbors that appear in the returned results, usually measured over the top k) and speed by query latency or throughput. A benchmark that only reports queries per second (QPS) at a fixed, high recall of 99% tells one story, while a benchmark showing QPS at a more pragmatic 95% recall tells another. A robust evaluation must present a curve that shows throughput across a spectrum of recall values. This lets potential users understand the performance envelope of the database and select the operating point that suits their application, be it a high-recall recommendation system or a low-latency real-time fraud detection engine.
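To make the idea of a recall/throughput curve concrete, here is a minimal sketch in plain Python. It is not a real ANN index: `approx_top_k` is a hypothetical stand-in that simply scans a prefix of the data, and the `candidates` knob plays the role that a search-effort parameter would play in an actual engine. The dataset is random and all function names are assumptions made for illustration.

```python
import math
import random
import time


def exact_top_k(query, vectors, k):
    """Ground truth: indices of the k nearest vectors by Euclidean distance."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]


def approx_top_k(query, vectors, k, candidates):
    """Toy ANN stand-in (assumption): only the first `candidates` vectors are scanned."""
    order = sorted(range(candidates), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]


def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true nearest neighbors present in the approximate result."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)


def recall_qps_curve(vectors, queries, k, candidate_settings):
    """Return one (setting, mean recall, queries per second) point per setting."""
    truth = [exact_top_k(q, vectors, k) for q in queries]
    curve = []
    for c in candidate_settings:
        start = time.perf_counter()
        results = [approx_top_k(q, vectors, k, c) for q in queries]
        elapsed = time.perf_counter() - start
        mean_recall = sum(recall_at_k(r, t) for r, t in zip(results, truth)) / len(queries)
        curve.append((c, mean_recall, len(queries) / elapsed))
    return curve


rng = random.Random(0)  # fixed seed so the synthetic data is reproducible
vectors = [[rng.random() for _ in range(16)] for _ in range(2000)]
queries = [[rng.random() for _ in range(16)] for _ in range(20)]

curve = recall_qps_curve(vectors, queries, k=10, candidate_settings=[500, 1000, 2000])
for c, r, qps in curve:
    print(f"candidates={c:5d}  recall@10={r:.2f}  qps={qps:.0f}")
```

Each printed row is one point on the curve. Against a real database the same loop would sweep the engine's own search-effort setting, and the ground truth would come from an exact (brute-force) search over the same dataset.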

The Unignorable Influence of Data Dynamics

Another critical dimension often overlooked in simplistic tests is the behavior of the database under continuous data mutation. Benchmarks frequently run against a static dataset, but production environments are anything but static. How does the database perform when new vectors are inserted, existing ones are updated, or others are deleted? Updating an index can be so resource-intensive that it degrades query performance significantly or even forces a full index rebuild, leading to downtime. A modern benchmark must therefore include tests for insertion latency, index refresh overhead, and query consistency during writes. The ability to handle real-time, streaming data at scale is what separates an academic prototype from an enterprise-grade solution.
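A mutation-aware test can be sketched as a loop that interleaves writes with reads and records latency for both. The example below is a rough illustration only: `ToyIndex` is a hypothetical brute-force stand-in for a real database client, and the "stale read" checks are one simple way to probe query consistency during writes (a freshly written vector should be its own nearest neighbor, and a deleted vector should no longer be returned).

```python
import math
import random
import statistics
import time


class ToyIndex:
    """Hypothetical brute-force index standing in for a real database client."""

    def __init__(self):
        self.vectors = {}

    def upsert(self, vec_id, vector):
        self.vectors[vec_id] = vector

    def delete(self, vec_id):
        self.vectors.pop(vec_id, None)

    def search(self, query, k):
        ranked = sorted(self.vectors, key=lambda i: math.dist(query, self.vectors[i]))
        return ranked[:k]


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def mixed_workload(index, n_ops, dim, seed=0):
    rng = random.Random(seed)
    insert_lat, query_lat, stale_reads = [], [], 0
    for op in range(n_ops):
        vec = [rng.random() for _ in range(dim)]
        _, t = timed(index.upsert, op, vec)
        insert_lat.append(t)
        # Read-your-write check: the new vector should be its own nearest neighbor.
        hits, t = timed(index.search, vec, 1)
        query_lat.append(t)
        if hits != [op]:
            stale_reads += 1
        # Every tenth operation, delete the vector and check it is no longer returned.
        if op % 10 == 9:
            index.delete(op)
            if op in index.search(vec, 1):
                stale_reads += 1
    return {
        "insert_p50_s": statistics.median(insert_lat),
        "query_p99_s": percentile(query_lat, 99),
        "stale_reads": stale_reads,
        "live_vectors": len(index.vectors),
    }


index = ToyIndex()
stats = mixed_workload(index, n_ops=200, dim=8)
print(stats)
```

With a real system, `ToyIndex` would be replaced by the vendor's client, and the interesting numbers are how the query percentiles move while writes are in flight and whether any stale reads appear.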

Scalability in a Multi-Dimensional World

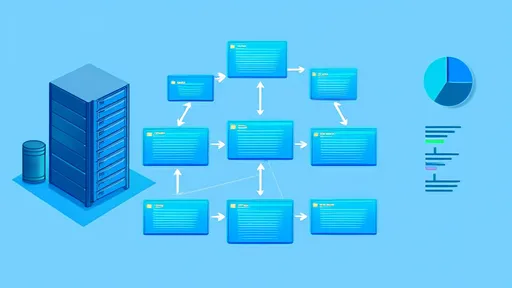

Scalability is a classic benchmark metric, but for vector databases it has unique facets. It is not just about handling more data; it is also about handling more dimensions. Scaling horizontally (adding more nodes to a cluster) and scaling vertically (adding more resources to a single node) can have vastly different performance characteristics. Furthermore, the curse of dimensionality means that adding features to a vector (increasing its dimensions) does more than raise the cost of each distance computation: distances between points become less discriminative, so indexes prune fewer candidates and similarity search gets disproportionately harder. A comprehensive benchmark must test scaling along three axes: the number of vectors (cardinality), the number of dimensions per vector, and the number of concurrent queries. The results show how the database will perform as an application grows from a prototype with millions of vectors to a planet-scale system with billions.
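One way to see why dimensionality deserves its own axis is distance concentration: for random data, the gap between the nearest and the farthest point shrinks relative to the nearest distance as dimensions are added, which leaves an index less room to rule candidates out. The sketch below measures that effect on uniformly random vectors. The function name and the choice of uniform data are assumptions for illustration; real embeddings usually have more structure and concentrate less sharply.

```python
import math
import random


def relative_contrast(dim, n_points=500, seed=0):
    """(farthest - nearest) / nearest distance from one random query to random points."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


# Sweep the dimensionality axis while holding cardinality fixed.
contrasts = {dim: relative_contrast(dim) for dim in (2, 32, 512)}
for dim, contrast in contrasts.items():
    print(f"dim={dim:4d}  relative contrast={contrast:.2f}")
```

The contrast drops as the dimension grows, which is why a benchmark run only at low dimensionality says little about behavior on high-dimensional embeddings.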

The Ecosystem and Operational Overhead

Raw query performance is meaningless if the database is impossible to manage, monitor, and integrate into a modern data stack. Consequently, new benchmarking dimensions must encompass operational simplicity. This includes metrics like the time required to provision a cluster, the ease of performing backups and restores, the granularity of the monitoring metrics exported, the robustness of connectors for popular data tools like Spark and Kafka, and support for deployment platforms such as Kubernetes. The total cost of ownership (TCO) is heavily influenced by these factors. A database that offers blazing-fast queries but requires a team of dedicated experts to keep it running may ultimately be less cost-effective for the business than a slightly slower but fully managed and automated service.

Towards a Standardized and Fair Framework

The absence of a universally accepted benchmark like TPC-C for the vector database space has led to a Wild West of marketing claims, with vendors often publishing results from optimized, non-representative tests. The community is now rallying to create standardized benchmarks. Initiatives are focusing on providing diverse, publicly available datasets, defining a common set of query workloads (e.g., single vector search, batch search, filtered search), and mandating full transparency of configuration parameters and hardware specs. The goal is to move from vendor-led performance reports to independent, third-party audits that provide fair and comparable results, empowering users to make informed decisions based on their specific needs.
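Transparency of configuration is easier to enforce when every published result carries a machine-readable description of how it was produced. The following is a minimal sketch of such a record, not an existing standard: the `BenchmarkRun` class, its field names, and the placeholder dataset and index values are all hypothetical. Only the three workload types come from the list above.

```python
import json
import platform
from dataclasses import asdict, dataclass, field

# Workload types named in the text: single vector, batch, and filtered search.
ALLOWED_WORKLOADS = {"single", "batch", "filtered"}


def describe_hardware():
    """Collect basic host details from the standard library's platform module."""
    return {
        "machine": platform.machine(),
        "processor": platform.processor(),
        "system": platform.system(),
        "python": platform.python_version(),
    }


@dataclass
class BenchmarkRun:
    """Hypothetical record of everything needed to reproduce one benchmark run."""

    dataset: str
    workload: str
    k: int
    index_params: dict
    hardware: dict = field(default_factory=describe_hardware)

    def __post_init__(self):
        if self.workload not in ALLOWED_WORKLOADS:
            raise ValueError(f"unknown workload: {self.workload!r}")

    def to_json(self):
        return json.dumps(asdict(self), indent=2, sort_keys=True)


run = BenchmarkRun(
    dataset="example-public-dataset",  # placeholder name, not a real dataset
    workload="filtered",
    k=10,
    index_params={"index_type": "example-graph-index", "build_param": 16},  # placeholders
)
print(run.to_json())
```

Publishing a record like this next to each recall and QPS figure lets a third party rerun the same configuration on comparable hardware and check the claim.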

In conclusion, the benchmark for vector databases has evolved into a multi-faceted mirror reflecting the complex realities of modern AI applications. It is no longer a single number but a comprehensive profile that balances the precision of recall against the urgency of speed, the stability of a static system against the chaos of live data, and the raw power of algorithms against the practicalities of operation. As this field continues to mature, these new dimensions will become the essential language for discussing, comparing, and ultimately selecting the foundational technology that will power the next generation of intelligent applications.

By /Aug 26, 2025
