The digital landscape has undergone a seismic shift. The traditional perimeter-based security model, once the bastion of network defense, is crumbling under the weight of cloud migration, remote workforces, and sophisticated cyber threats. In this new era, the principle of "never trust, always verify" has emerged as the cornerstone of modern cybersecurity. This is the world of Zero Trust, a paradigm that assumes breach and verifies each request as though it originates from an untrusted network. Within this framework, one of the most critical and dynamic capabilities is behavioral-based anomaly detection for access requests, a sophisticated layer of defense that moves beyond static credentials to understand the very rhythm of user and entity behavior.

At its core, Zero Trust is an architectural philosophy, not merely a product or a service. It dismantles the old concept of a trusted internal network versus an untrusted external one. Every access attempt, whether from inside or outside the corporate firewall, is treated with equal suspicion. Access is granted on a per-session basis, contingent upon strict identity verification and the principle of least privilege. However, verifying a username and password at the gate is no longer sufficient. Credentials can be stolen, and authorized users can turn malicious. This is where behavioral analytics transforms Zero Trust from a static gatekeeper into an intelligent, adaptive sentinel.

Behavioral-based anomaly detection operates on a simple yet powerful premise: every user and machine exhibits a characteristic pattern of normal behavior, a kind of digital fingerprint. This fingerprint draws on a wide range of contextual signals. For a user, it might include typical login times, geographic location, the devices they use, the sequence of applications they access, their typing speed, and even their mouse movement patterns. For a server or an IoT device, the signals include data transfer volumes, communication protocols, and connection frequencies. By establishing a robust baseline of this normal activity over time, the system can identify deviations that may signal a potential threat.
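
To make the idea of a baseline concrete, here is a minimal sketch in Python. It assumes a deliberately small set of signals (login hour, country, device ID, and transfer volume); the class name `UserBaseline`, its fields, and the helper `volume_zscore` are hypothetical names chosen for illustration, not part of any particular product.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class UserBaseline:
    """Running summary of one user's past logins (hypothetical schema)."""
    login_hours: Counter = field(default_factory=Counter)
    countries: Counter = field(default_factory=Counter)
    devices: Counter = field(default_factory=Counter)
    total: int = 0

    def observe(self, hour: int, country: str, device_id: str) -> None:
        """Fold one known-good login into the baseline."""
        self.login_hours[hour] += 1
        self.countries[country] += 1
        self.devices[device_id] += 1
        self.total += 1

    def rarity(self, hour: int, country: str, device_id: str) -> dict:
        """Per signal, the fraction of past logins that did NOT share this value.

        0.0 means the value was seen on every past login; 1.0 means never seen.
        """
        if self.total == 0:
            # No history yet: everything looks unfamiliar.
            return {"hour": 1.0, "country": 1.0, "device": 1.0}
        return {
            "hour": 1 - self.login_hours[hour] / self.total,
            "country": 1 - self.countries[country] / self.total,
            "device": 1 - self.devices[device_id] / self.total,
        }


def volume_zscore(history_mb: list, observed_mb: float) -> float:
    """Standard deviations between an observed transfer volume and its history."""
    mu = mean(history_mb)
    sigma = pstdev(history_mb)
    if sigma == 0:
        return 0.0 if observed_mb == mu else float("inf")
    return (observed_mb - mu) / sigma


baseline = UserBaseline()
for _ in range(20):
    baseline.observe(hour=9, country="GB", device_id="laptop-1")

print(baseline.rarity(hour=3, country="BR", device_id="unknown-device"))
print(volume_zscore([100, 110, 90, 100], observed_mb=500))
```

A real deployment would likely weight recent activity more heavily and track many more signals, but the shape is the same: accumulate history, then ask how unusual a new observation is relative to it.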

The technological engine powering this capability is a blend of machine learning and advanced analytics. Supervised learning models can be trained on historical data to recognize patterns associated with known attack vectors. However, the true strength lies in unsupervised and semi-supervised learning algorithms. These models do not require pre-labeled examples of attacks; instead, they continuously analyze the stream of access and activity data to identify outliers and anomalous clusters that deviate from the established baseline. This is crucial for detecting novel, previously unseen threats that bypass traditional signature-based defenses.
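
As a rough illustration of label-free outlier detection, the sketch below scores sessions with a modified z-score built from the median and the median absolute deviation (MAD). This is a simple statistical stand-in chosen so the example runs on the standard library alone; it is not the specific model any given platform uses, and the feature columns, sample values, and the 3.5 cutoff are assumptions made for the example.

```python
from statistics import median


def mad_scores(rows):
    """Score each row by its largest per-feature modified z-score.

    No labels are needed: the "normal" centre and spread of each feature
    are estimated from the data itself using the median and the MAD.
    """
    columns = list(zip(*rows))
    medians = [median(col) for col in columns]
    mads = [median(abs(v - m) for v in col) for col, m in zip(columns, medians)]

    scores = []
    for row in rows:
        per_feature = []
        for value, med, mad in zip(row, medians, mads):
            if mad == 0:
                # Feature is constant for at least half the rows.
                per_feature.append(0.0 if value == med else float("inf"))
            else:
                # 0.6745 rescales the MAD to be comparable to a standard deviation.
                per_feature.append(0.6745 * abs(value - med) / mad)
        scores.append(max(per_feature))
    return scores


def find_outliers(rows, threshold=3.5):
    """Return the indices of rows whose score exceeds the threshold."""
    return [i for i, score in enumerate(mad_scores(rows)) if score > threshold]


# Hypothetical session features: (login hour, MB downloaded, distinct apps used)
sessions = [
    (9, 120, 4),
    (10, 135, 5),
    (9, 110, 4),
    (11, 140, 6),
    (10, 125, 5),
    (3, 4200, 1),   # 3 AM login with a very large download
]

print(find_outliers(sessions))  # [5]
```

The median-based statistics matter here because a single extreme session does not drag the notion of "normal" toward itself, which a plain mean and standard deviation would allow.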

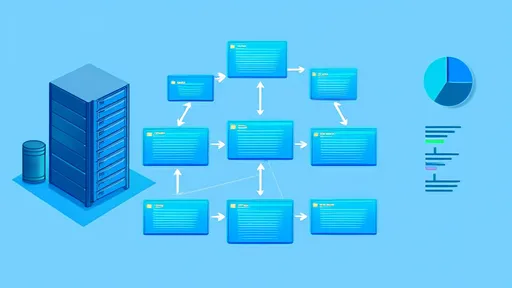

Consider a practical scenario. An employee in the finance department, based in London, typically logs in between 8:00 AM and 6:00 PM UK time, accesses the company's financial software, and then moves to email and internal wikis. The behavioral baseline understands this rhythm. Now, if a login attempt occurs for that user's credentials at 3:00 AM from an IP address in another country, followed by an immediate attempt to access a sensitive database they have never used before, the system raises a high-confidence alert. The static credentials are correct, but the behavior is utterly abnormal. The Zero Trust policy engine can then automatically trigger a step-up authentication challenge, block the session entirely, or severely limit the permissions for that session, effectively containing a potential account compromise.
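
The scenario above can be sketched as a small scoring function feeding a policy decision. Everything specific here is an assumption for illustration: the signal weights, the thresholds, the ordering of responses by severity, and the names `AccessRequest`, `Baseline`, `risk_score`, and `decide`.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up_authentication"
    RESTRICT = "restrict_permissions"
    BLOCK = "block_session"


@dataclass(frozen=True)
class AccessRequest:
    user: str
    hour: int        # local hour of day, 0-23
    country: str
    resource: str


@dataclass(frozen=True)
class Baseline:
    usual_hours: range
    usual_countries: frozenset
    usual_resources: frozenset


# Illustrative weights in points out of 100 (assumed, not tuned on real data).
WEIGHTS = {"hour": 25, "country": 40, "resource": 35}


def risk_score(req: AccessRequest, base: Baseline) -> int:
    """Add up the weight of every signal that falls outside the baseline."""
    score = 0
    if req.hour not in base.usual_hours:
        score += WEIGHTS["hour"]
    if req.country not in base.usual_countries:
        score += WEIGHTS["country"]
    if req.resource not in base.usual_resources:
        score += WEIGHTS["resource"]
    return score


def decide(score: int) -> Action:
    """Map a risk score to a response, most severe first (assumed thresholds)."""
    if score >= 90:
        return Action.BLOCK
    if score >= 60:
        return Action.RESTRICT
    if score >= 25:
        return Action.STEP_UP
    return Action.ALLOW


finance_user = Baseline(
    usual_hours=range(8, 18),                    # logins from 08:00 up to 17:59
    usual_countries=frozenset({"GB"}),
    usual_resources=frozenset({"finance-app", "email", "wiki"}),
)

# 3 AM, unfamiliar country, never-used database: all three signals are abnormal.
suspicious = AccessRequest("finance.user", hour=3, country="BR", resource="hr-database")
print(decide(risk_score(suspicious, finance_user)))

# Same user working late from home: only the hour is unusual.
working_late = AccessRequest("finance.user", hour=21, country="GB", resource="finance-app")
print(decide(risk_score(working_late, finance_user)))
```

Grading the response, rather than returning a single allow or deny, is what lets a single unusual signal cost the user one extra authentication prompt instead of a locked account.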

Implementing such a system comes with significant challenges. The foremost hurdle is false positives: legitimate activity flagged as anomalous. An employee working late on a critical project or traveling for business could trigger alerts, leading to alert fatigue among security analysts and disruption for the users affected. To mitigate this, systems must be carefully tuned and incorporate feedback loops in which analysts confirm or dismiss alerts, allowing the models to refine their accuracy over time. Furthermore, establishing the initial behavioral baseline requires a period of data collection, during which detection is less reliable. Privacy is another major concern: continuous monitoring of user behavior treads a fine line between security and employee surveillance, and it calls for clear policies and transparent communication.
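
One simple form such a feedback loop could take is threshold tuning from analyst verdicts. The sketch below assumes each reviewed alert is stored as a `(risk score, confirmed threat?)` pair and picks the lowest candidate threshold whose alerts would have met a target precision. The function name, the candidate thresholds, and the 0.8 target are illustrative assumptions.

```python
def tune_threshold(feedback, candidates, min_precision=0.8):
    """Pick the lowest alert threshold that meets a target precision.

    feedback:   list of (score, confirmed) pairs from analyst review, where
                confirmed is True for a real threat and False for a false positive.
    candidates: thresholds to consider.
    Falls back to the highest candidate if none meets the target.
    """
    for threshold in sorted(candidates):
        verdicts = [confirmed for score, confirmed in feedback if score >= threshold]
        if not verdicts:
            continue  # nothing would have alerted at this level
        precision = sum(verdicts) / len(verdicts)
        if precision >= min_precision:
            return threshold
    return max(candidates)


# Hypothetical analyst verdicts on past alerts.
reviewed = [
    (30, False), (45, False), (55, False), (65, True),
    (70, False), (80, True), (95, True),
]

print(tune_threshold(reviewed, candidates=[25, 50, 60, 75, 90]))  # 75
```

Raising the threshold reduces alert fatigue at the cost of missing lower-scoring threats (here, the confirmed alert at 65 would no longer fire), so a target like this is a policy decision rather than a purely technical one.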

Despite these challenges, the integration of behavioral analytics into a Zero Trust architecture is becoming non-negotiable for organizations serious about security. It represents a shift from reactive security, which responds to incidents after they occur, to a proactive and predictive stance. The system is constantly learning, adapting, and looking for the subtle whispers of an attack before it can escalate into a deafening roar. It is a dynamic shield that understands context, making access control decisions not just on who you are, but on how you are acting at that very moment.

As we look to the future, the evolution of this technology is bound to be influenced by advancements in artificial intelligence. We can anticipate more sophisticated models capable of understanding even more complex behavioral patterns and correlations across vast datasets. The integration with other security telemetry, from endpoint detection and response (EDR) to cloud security posture management (CSPM), will create a holistic, organization-wide security brain. This brain will not only detect anomalies but also predict potential attack paths and automatically orchestrate responses across the entire digital estate.

In conclusion, behavioral-based anomaly detection is the intelligent heartbeat of a mature Zero Trust network. It transcends the limitations of traditional security by injecting context and continuous verification into the access control process. While implementing it requires careful consideration of data, privacy, and tuning, its value in defending against the evolving threat landscape is immeasurable. It is the difference between having a locked door and having a vigilant security guard who knows the habits of every person entering the building, capable of spotting the slightest hint of suspicious activity. In the relentless battle against cyber adversaries, this depth of insight is not just an advantage; it is a necessity.

By /Aug 26, 2025
