Organizations today must manage identities across a growing mix of systems and platforms, each of which often has its own directory, credentials, and access rules. Identity Fabric is an architectural approach to this problem that offers a cohesive framework for unified identity management. Rather than leaving identity systems in isolated silos, it weaves them into an interoperable whole, with the goals of strengthening security, improving the user experience, and streamlining administration.

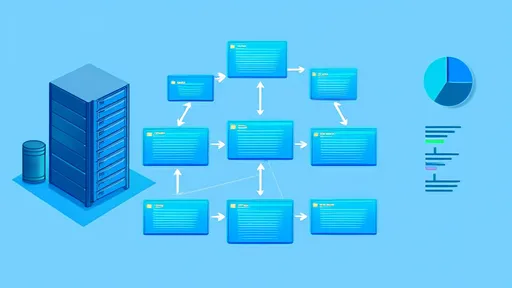

At its core, Identity Fabric is an architectural paradigm that enables organizations to manage digital identities consistently and securely across hybrid environments. Conventional identity management systems often operate in isolation. An Identity Fabric instead creates a unified layer that connects identity providers, directories, and applications. This layer keeps identity data synchronized in real time, so users have appropriate access rights regardless of which system or service they are using. The fabric metaphor is apt: it suggests both strength through interconnection and the flexibility to adapt to changing organizational needs.
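
To make the "unified layer" concrete, the following is a minimal Python sketch, assuming a simple precedence rule when two sources disagree about an attribute. `DirectoryConnector`, `IdentityFabric`, and `resolve` are hypothetical names chosen for illustration, not the API of any product, and in-memory dictionaries stand in for real directory or identity provider queries. The sketch resolves a profile on demand instead of copying data between systems. Real-time synchronization is another way to reach the same consistent view.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DirectoryConnector:
    """Hypothetical adapter for one identity source, such as an on-premises
    directory or a cloud identity provider. Records live in memory here."""
    name: str
    records: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def lookup(self, user_id: str) -> Optional[Dict[str, str]]:
        return self.records.get(user_id)


class IdentityFabric:
    """Hypothetical unified layer that queries every connector and merges the
    results into one profile, recording which source supplied each attribute."""

    def __init__(self, connectors: List[DirectoryConnector]):
        # Connectors are listed in precedence order: the first source to
        # supply an attribute wins if two sources disagree.
        self.connectors = connectors

    def resolve(self, user_id: str) -> Dict[str, Dict[str, str]]:
        profile: Dict[str, Dict[str, str]] = {}
        for connector in self.connectors:
            record = connector.lookup(user_id)
            if record is None:
                continue  # this source has no entry for the user
            for attr, value in record.items():
                # setdefault keeps the value from the higher-precedence source
                profile.setdefault(attr, {"value": value, "source": connector.name})
        return profile


hr = DirectoryConnector("hr", {"alice": {"department": "Finance", "title": "Analyst"}})
cloud = DirectoryConnector("cloud_idp", {"alice": {"email": "alice@example.com", "department": "Sales"}})
fabric = IdentityFabric([hr, cloud])
print(fabric.resolve("alice")["department"])  # {'value': 'Finance', 'source': 'hr'}
```

Recording the `source` of each attribute is a deliberate choice. When two systems disagree, administrators can see which one the fabric trusted.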

The driving force behind the adoption of Identity Fabric architectures is the growing complexity of modern IT ecosystems. Most enterprises today use a combination of on-premises systems, cloud services, mobile applications, and IoT devices, each with its own identity requirements. This heterogeneity creates significant management challenges, including security gaps, inconsistent user experiences, and operational inefficiencies. By implementing an Identity Fabric, organizations can establish a centralized governance model while keeping their identity systems distributed, balancing control with flexibility.

One of the most significant advantages of the Identity Fabric approach is a stronger security posture across the organization. Traditional perimeter-based security models are increasingly inadequate when users access resources from anywhere, on many kinds of devices. Identity Fabric supports a zero-trust security model by providing continuous authentication and authorization rather than a one-time check at login. Because the fabric spans systems, it can monitor user behavior across them, detect anomalous activity, and enforce security policies consistently throughout the digital environment. Consistent enforcement closes gaps between systems that attackers could otherwise exploit, and cross-system visibility improves an organization's ability to prevent, detect, and respond to security threats.
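
As one illustration of cross-system anomaly detection, the sketch below implements an "impossible travel" rule. Two consecutive logins by the same user, possibly reported by different systems, are flagged if the implied travel speed is implausible. The 900 km/h threshold, the `LoginEvent` fields, and the function names are assumptions for this example. A real fabric would combine many such signals rather than rely on a single rule.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

EARTH_RADIUS_KM = 6371.0
# Assumed threshold: anything faster than a commercial flight is treated as impossible.
MAX_SPEED_KMH = 900.0


@dataclass
class LoginEvent:
    user_id: str
    system: str       # which connected system reported the login
    timestamp: float  # seconds since the Unix epoch
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # min() guards against tiny floating-point overshoots above 1.0
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def impossible_travel(events: List[LoginEvent]) -> List[Tuple[LoginEvent, LoginEvent]]:
    """Flag consecutive logins by the same user whose implied travel speed
    exceeds MAX_SPEED_KMH, even when the two logins came from different systems."""
    flagged: List[Tuple[LoginEvent, LoginEvent]] = []
    last_seen: Dict[str, LoginEvent] = {}
    for ev in sorted(events, key=lambda e: e.timestamp):
        prev = last_seen.get(ev.user_id)
        if prev is not None:
            dist = haversine_km(prev.lat, prev.lon, ev.lat, ev.lon)
            hours = (ev.timestamp - prev.timestamp) / 3600.0
            # Two different places at the same instant is also impossible.
            if dist > 0 and (hours <= 0 or dist / hours > MAX_SPEED_KMH):
                flagged.append((prev, ev))
        last_seen[ev.user_id] = ev
    return flagged
```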

Identity Fabric also improves the user experience by enabling seamless access to resources. Users no longer need to remember multiple credentials or navigate a different authentication process for each system. The fabric handles this complexity behind the scenes. It provides single sign-on and adaptive authentication, which raises or relaxes requirements based on context and assessed risk (for example, asking for an additional factor when a login comes from an unfamiliar device). This not only improves productivity but also reduces the temptation to adopt insecure practices such as password reuse. A consistent identity experience across platforms also builds user trust and satisfaction, which matters particularly in customer-facing applications.
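
Adaptive authentication is often described as a risk score mapped to an action. The following minimal sketch assumes a handful of contextual signals and hand-picked weights and thresholds. All of these are hypothetical and not taken from any particular product. For each request, it decides whether the existing SSO session suffices, whether to step up to multi-factor authentication, or whether to deny the request.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"          # the existing SSO session is sufficient
    STEP_UP = "step_up_mfa"  # ask for an additional authentication factor
    DENY = "deny"


@dataclass
class AccessContext:
    known_device: bool
    usual_country: bool
    hour_utc: int              # 0-23
    resource_sensitivity: int  # assumed scale: 0 = low, 1 = medium, 2 = high


# Hypothetical weights and thresholds; real deployments tune these to their own data.
WEIGHTS = {"new_device": 30, "new_country": 40, "off_hours": 10, "per_sensitivity_level": 15}
STEP_UP_AT = 30
DENY_AT = 80


def risk_score(ctx: AccessContext) -> int:
    """Sum the weights of the risk signals present in this request."""
    score = 0
    if not ctx.known_device:
        score += WEIGHTS["new_device"]
    if not ctx.usual_country:
        score += WEIGHTS["new_country"]
    if ctx.hour_utc < 6 or ctx.hour_utc >= 22:  # assumed off-hours window
        score += WEIGHTS["off_hours"]
    score += WEIGHTS["per_sensitivity_level"] * ctx.resource_sensitivity
    return score


def decide(ctx: AccessContext) -> Decision:
    """Map the risk score onto an authentication requirement."""
    score = risk_score(ctx)
    if score >= DENY_AT:
        return Decision.DENY
    if score >= STEP_UP_AT:
        return Decision.STEP_UP
    return Decision.ALLOW
```

With these weights, a familiar device in the usual country needs no extra step. A new device alone, during working hours and for a low-sensitivity resource, triggers a step-up. A new device in an unfamiliar country at night, requesting a highly sensitive resource, is denied.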

From an operational perspective, Identity Fabric significantly reduces the administrative burden associated with identity management. Traditional approaches often require manual synchronization between systems, leading to errors, inconsistencies, and security vulnerabilities. The automated nature of Identity Fabric ensures that identity data remains consistent across all connected systems, reducing the need for manual intervention and minimizing the risk of human error. This automation extends to provisioning and deprovisioning processes, ensuring that access rights are promptly updated when users join, move within, or leave the organization.
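
The join, move, and leave cases described above can all be handled by one reconciliation step. It compares the access each user should have with the access they currently hold. This is a minimal sketch, assuming an HR system is the authoritative source of roles and that `ROLE_ENTITLEMENTS` (a hypothetical mapping) defines which entitlements each role should have. A real deployment would push the resulting actions to each connected system's provisioning interface.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical role-to-entitlement mapping; the authoritative roles are
# assumed to come from an HR system.
ROLE_ENTITLEMENTS: Dict[str, Set[str]] = {
    "engineer": {"git", "ci", "wiki"},
    "sales": {"crm", "wiki"},
}


def desired_state(hr_records: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each active user (user_id -> role) to the entitlements the role grants."""
    return {user: set(ROLE_ENTITLEMENTS.get(role, set())) for user, role in hr_records.items()}


def reconcile(hr_records: Dict[str, str], current: Dict[str, Set[str]]) -> List[Tuple[str, str, str]]:
    """Return (action, user, entitlement) tuples that bring current access in
    line with the HR records:
      - joiner: in HR, no current access     -> grants only
      - mover:  role changed                 -> grants for the new role, revokes for the old
      - leaver: has access, no longer in HR  -> revokes everything
    """
    desired = desired_state(hr_records)
    actions: List[Tuple[str, str, str]] = []
    for user in sorted(set(desired) | set(current)):
        want = desired.get(user, set())
        have = current.get(user, set())
        for ent in sorted(want - have):
            actions.append(("grant", user, ent))
        for ent in sorted(have - want):
            actions.append(("revoke", user, ent))
    return actions
```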

The technological foundation of Identity Fabric rests on several key components and standards. Standard identity protocols are central to interoperability between systems. OAuth 2.0 handles delegated authorization, OpenID Connect adds authentication on top of OAuth 2.0, and SAML supports federated single sign-on. The fabric typically also incorporates identity governance and administration (IGA) tools, privileged access management (PAM), and identity analytics. Together, these components form an identity ecosystem that can scale with organizational growth and adapt to emerging technologies. Relying on standard protocols lets the fabric integrate with existing systems and makes it easier to adopt new ones as the technology evolves.
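
One place this interoperability shows up in practice is the ID token that an OpenID Connect provider issues to an application. The application must check the token's issuer (`iss`), audience (`aud`), expiry (`exp`), and, if it sent one, the `nonce` before trusting it. The sketch below is a stdlib-only illustration under stated assumptions. It covers only this subset of the OpenID Connect Core validation rules (for example, `iat` and `azp` handling are omitted). It also assumes the token's signature has already been verified with a JOSE library and the provider's published keys, a step it deliberately does not perform. The function names are hypothetical.

```python
import base64
import json
import time
from typing import Any, Dict, List, Optional


def b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_claims_unverified(id_token: str) -> Dict[str, Any]:
    """Return the payload of a compact JWT. This does NOT check the signature;
    verify it with a JOSE library and the provider's keys first."""
    _header, payload, _signature = id_token.split(".")
    return json.loads(b64url_decode(payload))


def id_token_claim_errors(
    claims: Dict[str, Any],
    expected_issuer: str,
    client_id: str,
    expected_nonce: Optional[str] = None,
    now: Optional[float] = None,
    leeway_s: int = 60,
) -> List[str]:
    """Check a subset of the ID token claim rules. An empty list means
    these particular checks passed."""
    now = time.time() if now is None else now
    errors: List[str] = []
    if claims.get("iss") != expected_issuer:
        errors.append("iss does not match the expected issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]  # aud may be a string or a list
    if client_id not in audiences:
        errors.append("aud does not contain this client_id")
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or now > exp + leeway_s:
        errors.append("token is expired or has no exp")
    if expected_nonce is not None and claims.get("nonce") != expected_nonce:
        errors.append("nonce does not match the authentication request")
    return errors
```

Checking `aud` against the application's own client identifier matters. A token issued for one application should not be accepted by another, and that restriction is part of what lets many applications share the same identity provider safely.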

Despite its numerous benefits, implementing an Identity Fabric architecture presents certain challenges that organizations must address. Legacy systems often lack the capabilities to integrate seamlessly with modern identity protocols, requiring additional investment in integration tools or system upgrades. Cultural resistance within organizations can also pose obstacles, as different departments may be accustomed to managing identities independently. Additionally, the centralized nature of identity management through a fabric raises important questions about data privacy and compliance, particularly in regulated industries where strict controls govern how identity information is handled and stored.

The evolution of Identity Fabric continues as new technologies emerge and business requirements evolve. Artificial intelligence and machine learning are increasingly being integrated into identity fabrics to enhance threat detection capabilities and automate decision-making processes. Blockchain technology shows promise for creating decentralized identity models that could complement or enhance traditional fabric architectures. As organizations continue their digital transformation journeys, the Identity Fabric concept will likely evolve to address new use cases and challenges, particularly around IoT identity management and the increasing importance of customer identity and access management.

Looking ahead, Identity Fabric is more than a technological solution: it is a strategic approach to identity management that aligns with broader business objectives. Organizations that successfully implement Identity Fabric architectures are better positioned to respond quickly to market changes, adopt new technologies smoothly, and build stronger relationships with users through better security and experiences. As digital identity becomes ever more central to how organizations operate and interact with stakeholders, the holistic approach offered by Identity Fabric will likely become the standard rather than the exception in enterprise identity management.

The journey toward implementing an Identity Fabric requires careful planning and execution. Organizations must begin by assessing their current identity landscape, identifying pain points, and defining clear objectives for what they want to achieve with unified identity management. This assessment should include technical considerations, such as existing systems and integration capabilities, as well as business factors like compliance requirements and user experience goals. A phased approach to implementation often proves most successful, allowing organizations to demonstrate value incrementally while managing risk and complexity effectively throughout the transformation process.

In conclusion, Identity Fabric represents a paradigm shift in how organizations approach identity management. By creating a unified, interconnected framework that spans diverse systems and environments, this approach addresses the fundamental challenges of modern digital identity while enabling new capabilities and efficiencies. As organizations continue to navigate an increasingly complex digital landscape, the principles and practices of Identity Fabric offer a path toward more secure, efficient, and user-centric identity management. While implementation requires careful consideration and effort, the long-term benefits make Identity Fabric an essential consideration for any organization serious about identity management in the digital age.

By /Aug 26, 2025
